I just want that other queries will modify them when I’m reading it. UPDLOCK acquires a modify lock telling the system it intends to modify the data but in fact I won’t modify it. Reading through other blogs I have seen following recipe: INSERT INTO. Could it be that the INSERT locks are not applied to the SELECT tables although being the same table? My SELECT query has also a part in it where it checks the very same table, I’m inserting data into for records that already exists. How could that be? It must be something with the SELECT query. WITH (TABLOCKX)īut somehow, I still got duplicates in my hubs and links. My statement looked usually like that: INSERT INTO. TABLOCK will try to grab a „shared“ lock on that table while the X gets an exclusive lock. But I want to be sure that no other query sneaks into a running query manipulating something in between. By default, SQL Server tries to lock as little as possible. To combat my issue, I rather use table hints. Sometimes I add FORCE ORDER or HASH JOIN to it, depending on performance.

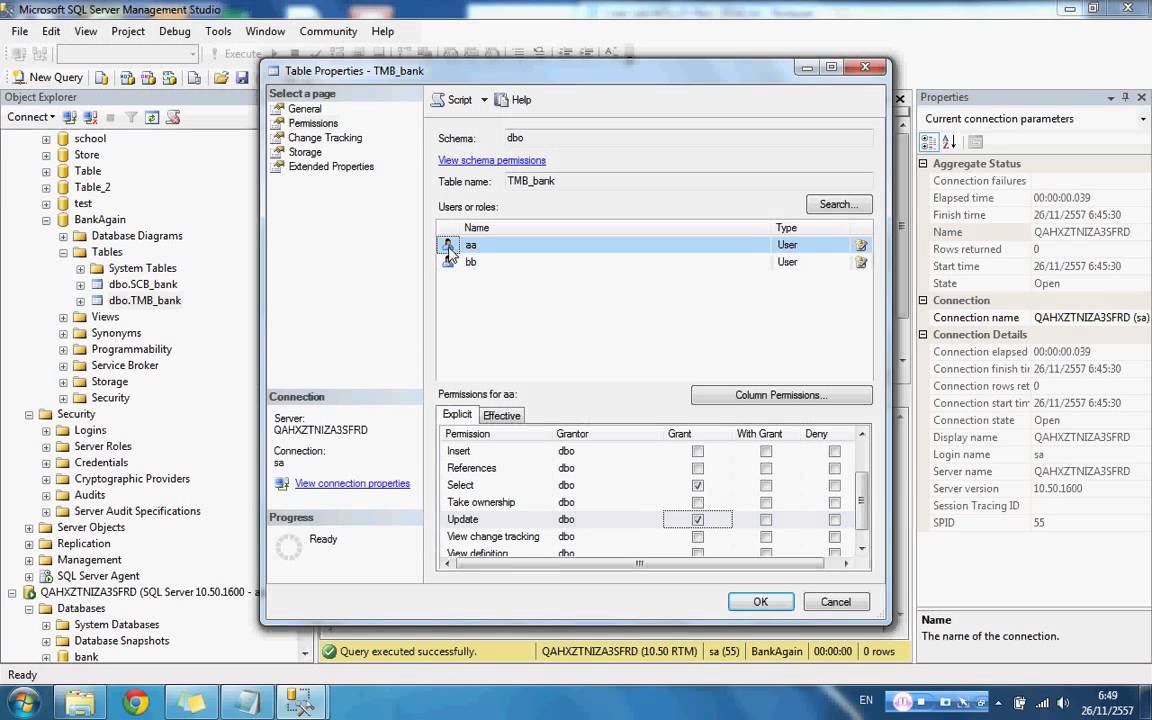

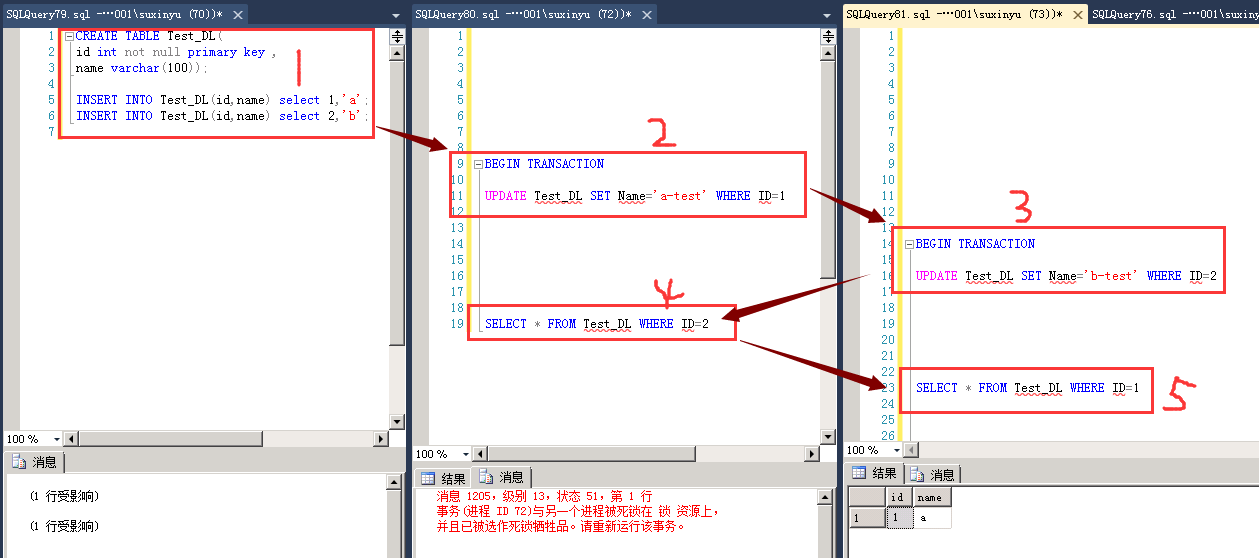

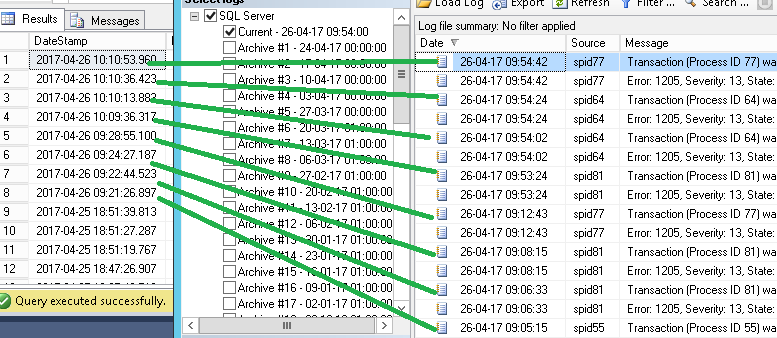

As you know, by default I add RECOMPILE to it to enforce getting the best plan for every data set instead of recycling old plans. Query hints are added at the end with OPTION(something). Some are affecting the whole query manipulating the execution plan (query hints) and some the queried table (table hints). There are a lot of hints, which we can add to a query. Hints are another way to manipulate the SQL Servers decision engine. But I got the issue solved about having duplicates in hubs and links. I was hit with a lot of deadlock errors in the coming weeks. I added the following statement to my scripts: SET TRANSACTION ISOLATION LEVEL SERIALIZABLEĪll following transactions are then executed with that isolation level. With restartable jobs this isn’t an issue. SQL Server terminates 1 query telling it that is a „victim of deadlock“. Sometimes 2 queries want to have access to the same resource, but queuing won’t help as other resources are blocked too. A deadlock is an issue when SQL Server tries to find out what to do next in a safe way. Many websites express, that SERIALIZABLE is the most secure option, but has a very hard disadvantage – it leads to deadlocks. Sounds weird, but I couldn’t help suspecting. Especially in fact loading with looking up dimension keys. I suspected that although I had all those table locks (see below) in place, data got changed between an INSERT and its SELECT statement. I don’t want to explain all the nifty details about each option. My search for a solution led me to learn more about Transaction Isolation Level. This layout I had in place, when I discovered duplicate entries. The transaction is written like that: BEGIN TRANSACTIONīy default I add OPTION(RECOMPILE) after fighting against bad execution plans. With Data Vault it is rather a usual pattern. In the data area I didn’t respect to work with transactions for some time, as I was usually transforming data from source to target, hardly inserting data in parallel into the same table. Working with transactions is usually something for applications having SQL Server as a backend, to ensure, that the data is written correctly to the database spanning multiple table manipulations. There are some methods to influence its decision making.

#Page lock deadlock sql server update#

Therefore, this is a little sum up about my lessons learnt.ĭepending on the number of queries running at the same time with SELECT, UPDATE and DELETE, SQL Server decides, which data is locked, read, waited for, overwritten or inserted. I must admit, I’m not the export in that area. Maybe the unique constraint indexes have additional functions when loading data to it. Although I have used all measures below, it happened. This was the reason to investigate further about why it did happen.

Lately after working on a project with huge amount of data, I got hit occasionally with duplicate rows in my hubs and links. But I have a regular report which checks, if the constrains are still intact. The application should make sure that everything is fine. At least maybe for the next year until I find another solution.Īs you know I dropped all indexes of my data warehouse and therefore also unique constrains on my tables. After the last Index blog post here another technical issue I recently thought to have solved.

0 kommentar(er)

0 kommentar(er)